Decentralized Identity Governance: A Zero-Trust Approach in AI

TL;DR

Agentic AI Identity Framework

Traditional Identity and Access Management (IAM) systems are inadequate for the dynamic nature of AI agents operating in Multi Agent Systems (MAS). A novel Agentic AI - IAM framework is needed due to the limitations of existing protocols when applied to MAS. These limitations include coarse-grained controls, a single-entity focus, and a lack of context-awareness. The proposed framework leverages Decentralized Identifiers (DIDs) and Verifiable Credentials (VCs) to encapsulate an agent’s capabilities, provenance, behavioral scope, and security posture. Cloud Security Alliance paper listed initial reasons and approach. This paper expanded on our previous paper and proposed a more robust approach.

Key Components

The Agentic AI IAM framework includes several key components:

- Agent Naming Service (ANS) for secure and capability-aware discovery

- Dynamic fine-grained access control mechanisms

- Unified global session management and policy enforcement layer for real-time control

- Zero-Knowledge Proofs (ZKPs) for privacy-preserving attribute disclosure and verifiable policy compliance.

Traditional IAM Limitations in MAS

Traditional IAM protocols like OAuth 2.0, OpenID Connect (OIDC), and SAML are insufficient for Multi-Agent Systems (MAS) due to the dynamic, decentralized, and interconnected nature of MAS. Microsoft Entra ID forms the backbone of current identity management for human users and traditional IT systems.

- Coarse-Grained and Static Permissions: Traditional protocols rely on pre-defined scopes or roles that are too broad for AI agents needing granular, task-specific permissions. AI agents in MAS frequently require granular, task-specific permissions that can change dynamically based on context, mission objectives, or real-time data analysis.

- Single-Entity Focus vs. Complex Delegations: Current protocols are designed for single authenticated principals, struggling with complex delegation chains where agents spawn sub-agents or act on behalf of multiple principals. Protocols are architected around a single authenticated principal (user or application).

- Limited Context Awareness: IAM decisions lack understanding of runtime context, agent intent, or risk level. Traditional IAM decisions are largely based on static roles or scopes, with minimal understanding of the runtime context, agent intent, or associated risk level.

- Scalability Issues: Managing authentication events and tokens for numerous ephemeral agents overwhelms traditional IAM infrastructure. Traditional IAM infrastructure can be overwhelmed by the volume of authentication events and tokens.

- Dynamic Trust Models: Agents need to authenticate each other across organizational boundaries without a pre-existing trust fabric. Peer-to-peer trust establishment between autonomous agents from different trust domains is not natively supported.

- NHI Proliferation: Each autonomous agent may require Non-Human Identities (NHIs) for numerous APIs, databases, and services, leading to an exponential growth in secrets that must be securely stored, rotated, and managed. NHIs for numerous APIs, databases, and services, leading to an exponential growth in secrets that must be securely stored, rotated, and managed

- Global Logout/Revocation Complexity: Ensuring immediate and comprehensive revocation of access rights across all systems upon agent compromise is challenging. Fragmented revocation mechanisms can leave lingering access.

Unique Challenges Posed by Agentic AI

Agentic AI introduces complexities beyond protocol mismatches:

- Autonomy and Unpredictability: Autonomous agents can make decisions not explicitly programmed, challenging static policy definitions.

- Ephemerality and Dynamic Lifecycles: Rapid creation, cloning, and destruction of agents make managing identities with persistent credentials risky. An ”ephemeral authentication” approach is needed.

- Evolving Capabilities and Intent: Agents can adapt behavior and goals over time, requiring adaptable IAM systems.

- Verifiable Provenance and Accountability: Tracing actions back to specific agent instances and ensuring non-repudiation is crucial.

- Preventing Privilege Escalation: Agents might probe their environment to grant themselves higher privileges if not carefully constrained.

- Risks of Over-Scoping Access and Permissions: Agents will actively explore and utilize every permission available to them.

- Secure and Efficient Cross-Agent Communication & Collaboration: The need for secure, low-overhead authentication and authorization between them becomes paramount.

- Actions Taken May Not Directly Correlate to Human Requests: An IAM system must be able to discern between when an action is taken at the direct request of a human, and when it is the result of an agentic decision.

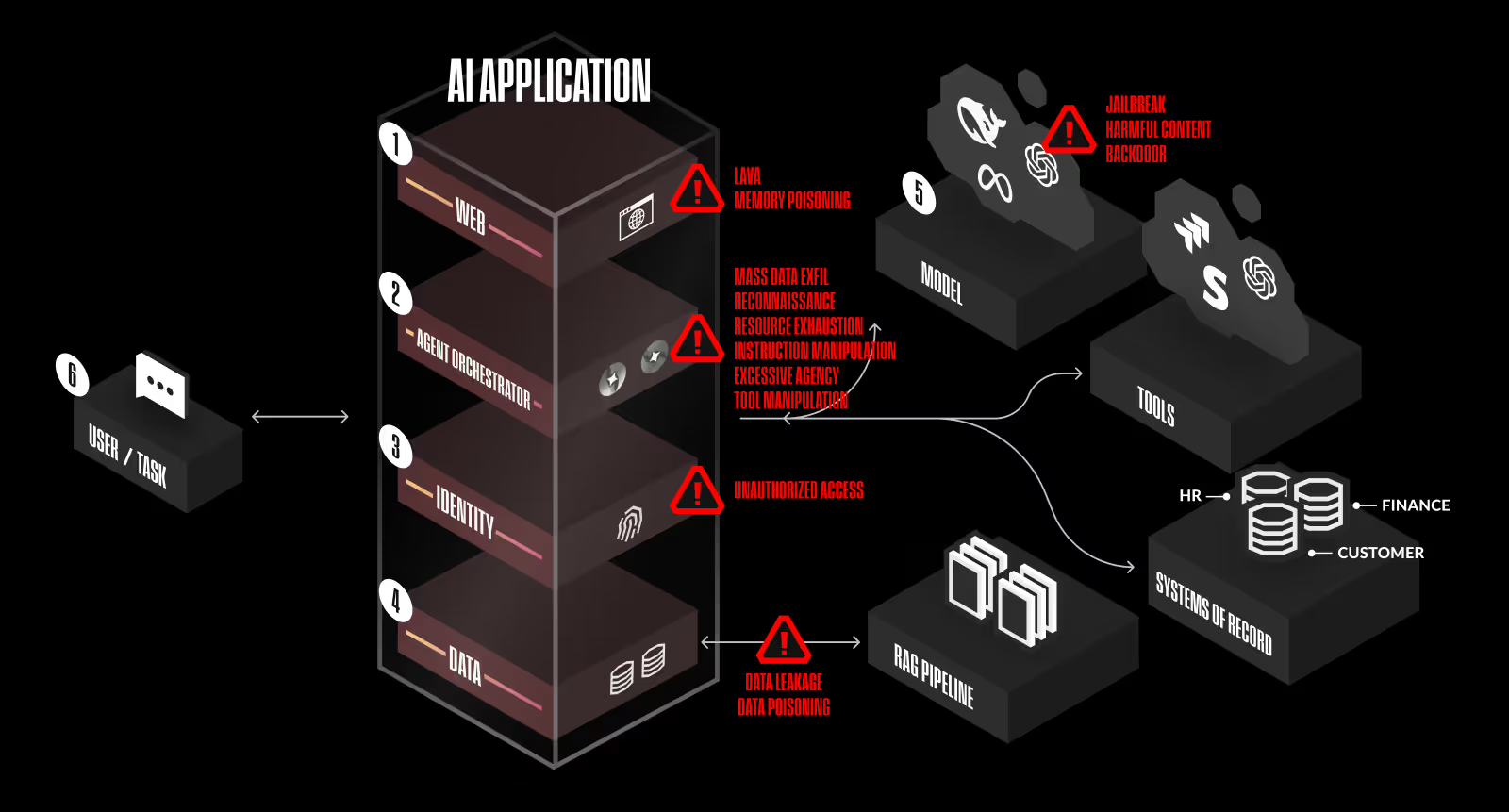

AI Security: Key Pillars

Securing AI-native systems requires decomposing the application stack into critical risk areas, defined as six layers:

- Web

- Agent Orchestrator

- Identity

- Data

- Model

- User Behavior

Thinking along these categories helps identify relevant risks and apply appropriate mitigations.

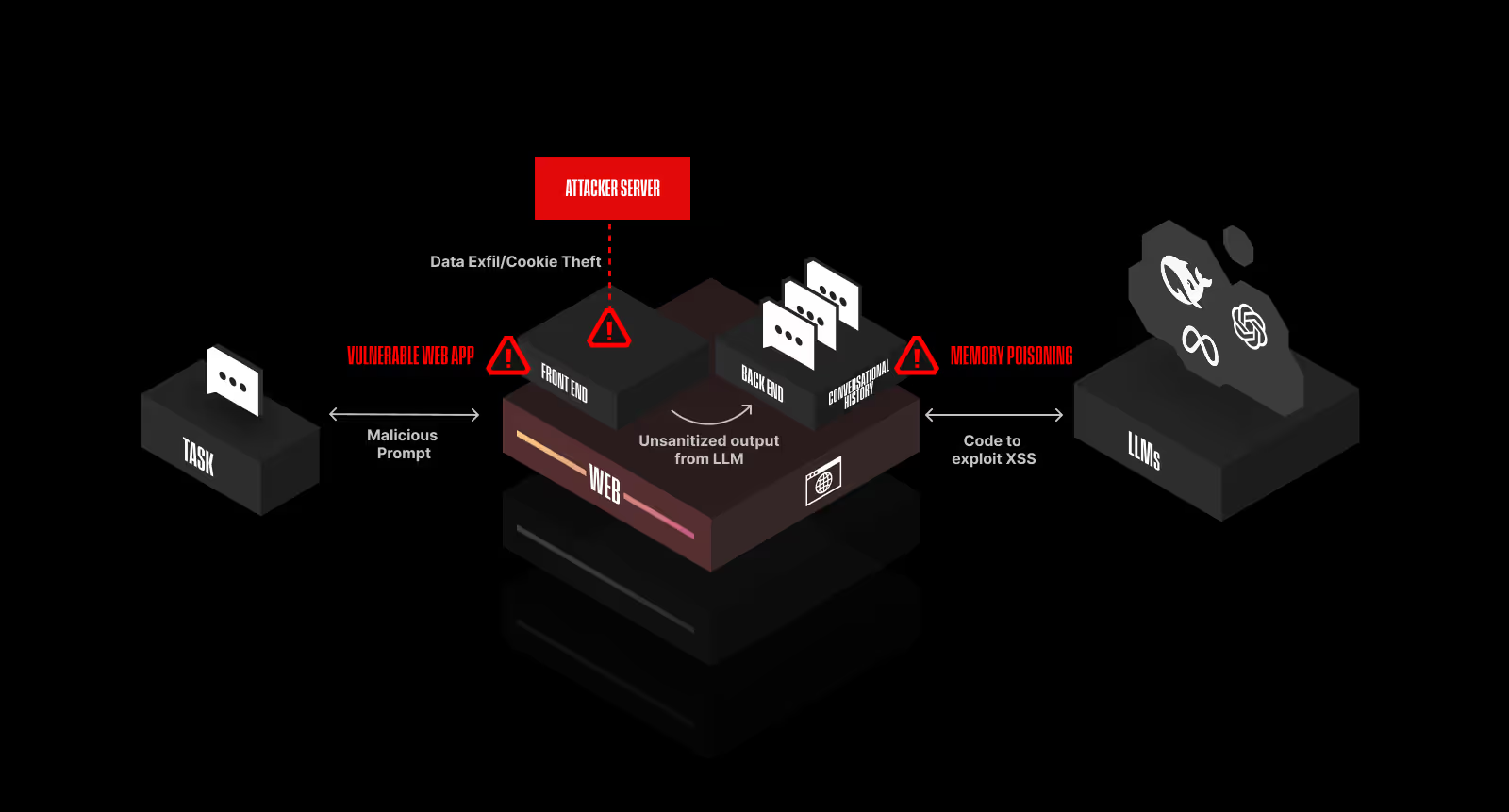

Web Layer Security

Image courtesy of Straiker

The web layer includes traditional application logic with new architectural requirements for AI-powered applications. Storing and managing conversational history or memory enables natural language interfaces to provide personalization, maintain context across interactions, and improve efficiency. The introduction of natural language interfaces blurs the line between user input and system logic, creating dynamic and unpredictable execution paths. AI-powered applications interact with users, agents, APIs, and other AI models, making identity management more complex than traditional authentication and authorization models.

Language Augmented Vulnerabilities in Applications (LAVA)

Language Augmented Vulnerabilities in Applications (LAVA), is a new class of security threats that arise at the intersection of traditional application vulnerabilities and AI-driven language capabilities. Unlike conventional exploits that target software flaws directly, LAVA attacks emerge from the way applications interpret and embed AI-generated content to trigger unintended application behavior, expose sensitive data, or bypass security controls. Security threats that arise at the intersection of traditional application vulnerabilities and AI-driven language capabilities.

- Growing Cyber Threat: 75% of applications tested responded with unsanitized AI-generated code.

- Mitigation: AI-generated outputs are required to be sanitized, validated, and continuously monitored for exploitation.

Memory Poisoning

In many AI applications, conversational history is persistently stored. Persistent storage is critical for enabling personalization, context awareness, efficiency, continuity across sessions and consistency. Enterprises are increasingly wary of scenarios where a malicious instruction is embedded within a stored conversation, potentially triggering unintended or harmful behavior in future interactions. Malicious instruction is embedded within a stored conversation, potentially triggering unintended or harmful behavior in future interactions.

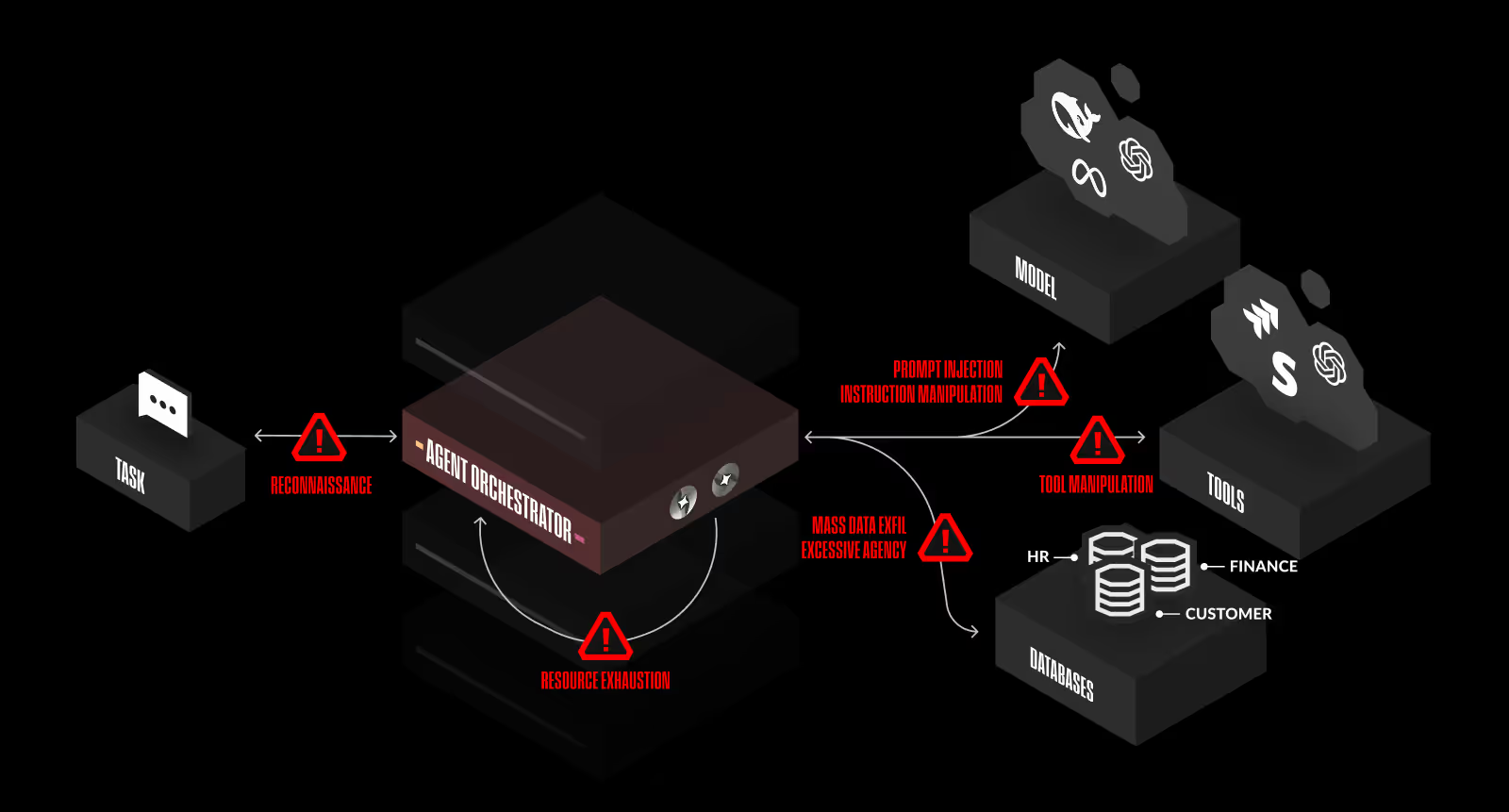

Agent Orchestrator Security

Image courtesy of Straiker

Agents are rapidly emerging as one of the most exciting evolutions of AI yet high-risk challenges for AI security. Security challenges for AI security. Unlike static AI models, agents operate autonomously, execute complex workflows, and integrate external tools and APIs, making them susceptible to a broad range of novel attack techniques. Attack techniques for AI Security.

Risks to Agentic Workflows

- Reconnaissance: Attackers can map out the agentic workflow to gather intelligence about how agents interact with tools, APIs, and other systems.

- Instruction Manipulation: Attackers can influence an agent’s input to alter its reasoning or inject malicious directives.

- Tool Manipulation: Attackers can gain control over an API response or file input to manipulate how the agent interprets data.

- Excessive Agency: Agents with broad permissions may autonomously initiate actions beyond their intended scope.

- Resource Exhaustion: Adversaries can overload the system with excessive instructions or API calls, consuming all available resources.

Identity Management

As AI systems become more deeply integrated into enterprise environments, identity has emerged as a critical challenge. Critical challenge for AI security. AI-driven applications interact with users, agents, APIs, and other AI models, making identity management more complex than traditional authentication and authorization models. Traditional authentication and authorization models. The risk of unauthorized access, excessive agency, and cross-user context leaks poses significant security concerns, particularly in multi-user and multi-agent environments where access control mechanisms must evolve beyond standard session-based protections. Access control mechanisms must evolve beyond standard session-based protections.

Data Security

Image courtesy of Straiker

Enterprises need to connect internal knowledge bases like SharePoint to their RAGs. This rich internal data combined with the tremendous intelligence of LLMs delivers unparalleled value to customers of these AI applications. Tremendous intelligence of LLMs delivers unparalleled value to customers of these AI applications.

Sensitive Data Leaks

- Failure Rate: 26% failure rate across AI applications as it pertained to sensitive data leaks.

- Data Types: Data ranged from AWS access keys to internal phone numbers and email addresses.

- Attack Vector: Language became the attack vector, allowing sensitive data to be exposed by cleverly worded prompts.

Data Poisoning

The deliberate manipulation or injection of malicious, misleading, or biased information into the data sources used by AI applications that rely on RAG is data poisoning. Data poisoning: Fraught with external and user controlled content like wikis, SharePoint, Confluence, or indexed web content, poisoning in this layer can compromise the integrity of the generated responses. Compromise the integrity of the generated responses.

Model Security

While model security evaluations such as detecting backdoors in .pkl files or guarding against supply chain manipulation remain important, enterprises are demanding more testing around how models perform in the context of their applications. Supply chain manipulation remain important, enterprises are demanding more testing around how models perform in the context of their applications. Risks associated with jailbreaking, memory poisoning and contextual grounding will be more critical than standalone model testing. Standalone model testing.

Jailbreaking (LLM Evasion)

- Vulnerability Rate: 75% of tested applications were vulnerable to jailbreak attacks.

- Consequences: Attackers can manipulate responses, exfiltrate sensitive data, and bypass security controls without direct code execution.

- LLM Evasion: Attackers can manipulate responses, exfiltrate sensitive data, and bypass security controls without direct code execution.

User Behavior

Leveraging natural language as a weapon, adversaries may launch multi-turn manipulation campaigns, evade filters with subtle shifts in tone or phrasing, or simply overwhelm systems with AI-driven automation designed to probe and exploit application boundaries. Exploit application boundaries. Traditional authentication and session management are no longer enough. Session management are no longer enough.

- AI native behavioral baselining, identifying users deviating from normal patterns.

- Anomaly detection in natural language, monitoring for sentiment shifts, escalation in complexity, or chaining of evasive patterns.

- Correlating behavioral anomalies with network signals like adversarial geographies, impossible travel, high-velocity login attempts, or geolocation mismatches.